Workflows:

Archiving takes place according to defined workflows from ingest to dissemination.

ESPRI's responsibilities include reformatting IPSL data and applying quality assurance to it, providing usage statistics, and maintaining multiple copies of the data (i.e., replicas) together with data discovery and distribution services. Consequently, ESPRI develops the services needed to cover all aspects of the data lifecycle, from ingestion to dissemination. Procedures are described per data stream in the ESPRI Data Management Plan (DMP).

The datasets managed by ESPRI are produced in the course of scientific projects that require specific Data Management Plans. These plans are co-authored by the partners and detail each step of archival data transformation and the role of each stakeholder (for instance, see the AWACA DMP in the relevant links). Procedures are described per dataset. For more information, please refer to the workflow diagram in the relevant links. Data management guidelines are made available to data producers and give clear instructions on how to prepare, format, document and submit their data to the corresponding platform, including how to complete the necessary metadata.

Checks are carried out to ensure that automatic or semi-automatic workflows have completed satisfactorily: the repository verifies that each workflow step succeeded (jobs executed correctly, no errors reported) and performs manual verification where necessary. Procedures are documented, and some are automated, as described in the “procedures” section of the DMP.

Data ingestion

Whether data is produced by the IPSL community or replicated at its request, all deposited data must conform to the ESPRI DMP or to a DMP co-authored by at least one ESPRI data manager.

Data is stored following the requirements set out by the DMP in terms of directory structure, versioning and file naming (e.g., CMIP/CORDEX). To comply with DMP rules, data is managed using the associated or recommended community tools, which are triggered through automatic or semi-automatic workflows.

This ensures that IPSL outputs are checked both qualitatively and quantitatively before they are published.
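As a minimal sketch of what a file-naming check can look like, the Python snippet below tests whether a file name follows a CMIP6-style pattern. The regular expression, the `check_filename` helper and the sample names are illustrative assumptions, not ESPRI's actual tooling; the authoritative rules remain those of the relevant DMP and of the community tools it recommends.

```python
import re

# Hypothetical pattern, loosely modelled on CMIP6-style file names:
# <variable>_<table>_<source>_<experiment>_<member>_<grid>[_<time range>].nc
FILENAME_PATTERN = re.compile(
    r"^(?P<variable>[A-Za-z0-9]+)_"
    r"(?P<table>[A-Za-z0-9]+)_"
    r"(?P<source>[A-Za-z0-9-]+)_"
    r"(?P<experiment>[A-Za-z0-9-]+)_"
    r"(?P<member>r\d+i\d+p\d+f\d+)_"
    r"(?P<grid>[a-z0-9]+)"
    r"(?:_(?P<time_range>\d+-\d+))?"
    r"\.nc$"
)


def check_filename(name):
    """Return the parsed name components, or None if the name does not conform."""
    match = FILENAME_PATTERN.match(name)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # Sample names for illustration only.
    for sample in (
        "tas_Amon_IPSL-CM6A-LR_historical_r1i1p1f1_gr_185001-201412.nc",
        "temperature-final.nc",
    ):
        status = "conforms" if check_filename(sample) else "rejected"
        print(f"{sample}: {status}")
```

A check of this kind only covers the naming rule; directory structure, versioning and metadata content would need their own checks.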

Data curation

Data curation relies on the versioning strategy described in the DMP of the datasets that ESPRI replicates or to which IPSL contributes.

For instance, climate simulations are superseded by new runs over time. Users are informed of new versions automatically, either by email or through a “latest” symbolic link on the filesystem. Older or deprecated versions are temporarily kept locally to guarantee the reproducibility of recent analyses made by IPSL users, even if the original data has been unpublished. Deprecated dataset versions can be removed or unpublished on a 7-year cycle to free disk space for newer datasets, provided that the depositors have documented the data changes on an errata service. The data curation strategy also depends on how frequently data replicated “on demand” is accessed. ESPRI preserves replicated data long enough to ensure the reproducibility of the analyses that rely on it.

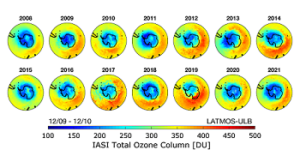
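The “latest” symbolic link used for climate simulations could be maintained along the following lines. This is a minimal Python sketch for a POSIX filesystem, not ESPRI's actual procedure: the `vYYYYMMDD` directory naming, the `update_latest_link` helper and the temporary link name are assumptions made for illustration.

```python
import os
import re
import tempfile
from pathlib import Path

# Assumed layout: one sub-directory per dataset version, named vYYYYMMDD.
VERSION_DIR = re.compile(r"^v\d{8}$")


def update_latest_link(dataset_dir):
    """Point <dataset_dir>/latest at the newest version directory.

    Returns the name of that directory, or None if no version is present.
    """
    dataset_dir = Path(dataset_dir)
    versions = sorted(
        entry.name
        for entry in dataset_dir.iterdir()
        if entry.is_dir() and not entry.is_symlink() and VERSION_DIR.match(entry.name)
    )
    if not versions:
        return None
    newest = versions[-1]  # vYYYYMMDD names sort chronologically as plain strings
    link = dataset_dir / "latest"
    temporary = dataset_dir / "latest.tmp"
    if temporary.is_symlink() or temporary.exists():
        temporary.unlink()  # clean up a leftover from an interrupted run
    # Create the new link under a temporary name, then rename it over the old
    # one, so that readers never observe a missing "latest" entry.
    os.symlink(newest, temporary, target_is_directory=True)
    os.replace(temporary, link)
    return newest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for version in ("v20190101", "v20210615"):
            (Path(root) / version).mkdir()
        print("latest ->", update_latest_link(root))
```

Older version directories are left untouched here, which matches the idea of keeping deprecated versions available for a while; removing them would be a separate, policy-driven step.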

Similarly, for observational datasets, data curation follows a version-based strategy: datasets are superseded by new versions over time.

If a DOI has been registered for the dataset, the information on the landing page is updated and a new DOI is assigned to the new version of the dataset.

Data dissemination

Data and metadata dissemination at ESPRI is accomplished through a comprehensive set of three web services:

- The IPSL ESGF node as the entry point for the climate data catalog,

- The IPSL meta catalog,

- The AERIS data catalog, which contains the referenced ESPRI observational datasets.

These services provide a wide variety of well-documented capabilities. They also provide the relevant links for user guidance.

At the depositor's request or as required by the DMP, ESPRI can restrict data access by applying standard authentication layers (login/password, Unix permissions, OpenID, OAuth) to respect asset ownership rights.

Constant attention is paid to workflow efficiency. Users report the problems they detect on a dedicated mailing list. Critical issues are discussed in monthly meetings of the whole ESPRI staff, where the necessary changes to the workflows in place are determined and validated.

ESPRI staff bring together a variety of profiles and areas of expertise covering a wide range of environmental data. This makes it possible:

- To share and learn from workflow processes (e.g., a pipeline handling climate simulations can be adapted to observational datasets and benefit from best practices),

- To solve issues at any stage of the workflows.