Documented storage procedures:

The repository applies documented processes and procedures in managing archival storage of the data.

Data storage and management processes (i.e., ingestion, organization, replication, versioning, etc.) were developed and are maintained by the ESPRI staff.

Each procedure is presented as a private, dated, and versioned web page with one or more authors and keywords, so that other ESPRI engineers can find it easily. Each procedure briefly states its objective and the related documentation, then lists the technical requirements (e.g., the machines to which it applies and the software, libraries, and dependencies required). This is followed by a step-by-step list of commands or scripts to run and checks to perform to complete the procedure. Following this template, ESPRI has, for example, procedures for applying quality checks and for deploying and updating the ESGF stack used to distribute data from climate simulations.

The procedures are applied by clearly identified and qualified ESPRI engineers at each step of the Data Management Plan. There are therefore no separate quality assurance steps to verify that a procedure has been carried out as described.

Regarding storage, and in addition to its Data Management Plan, the ESPRI data repository is distributed across two of the three geographical sites of the ESPRI infrastructure: Sorbonne University and Ecole Polytechnique. In addition to this multi-site implementation, ESPRI has dedicated network links, created in 2018 and 2020, with two GENCI HPC centers, both located in the Ile-de-France area: the Très Grand Centre de Calcul (TGCC) of the French Atomic Energy Commission (CEA) and IDRIS.

Referenced data of high interest to the designated community (e.g., CMIP climate results or ERA reanalysis) is hosted by our HPC partners, who guarantee a high service level through in-house storage procedures and dedicated IT staff. ESPRI data managers collaborate closely with the HPC partners' IT teams through quarterly meetings. This allows our team to keep a clear, global view of the storage capacity, its status, and its mid-term evolution. The geographical distribution of the ESPRI data center CDC also provides redundancy in the thematic and technical expertise and skills of the IT staff from the IPSL laboratories involved in developing and maintaining ESPRI services.

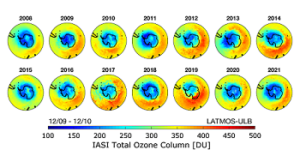

Although ESPRI does not hold highly sensitive data, different backup strategies are applied to the ESPRI datasets. Observational datasets of high interest to the designated community or acquired by the IPSL Observation Center (SIRTA) are mirrored daily across the two ESPRI data sites by in-house automated processes based on the “rsync” Unix tool. A subset of core observational datasets is also regularly backed up to a tape system for long-term preservation. Climate simulations are duplicated at the European and international levels: identified IS-ENES and ESGF partners, such as the British Centre for Environmental Data Analysis (CEDA), IPSL (France), the German Climate Computing Center (DKRZ), the Australian National Computational Infrastructure (NCI), and Lawrence Livermore National Laboratory (LLNL, US), continuously replicate climate model results from other modeling groups in the federation, in the manner of a distributed RAID system. This replication strategy relies on the ESGF download client developed by ESPRI and uses the GridFTP transfer protocol. ESPRI operationally provides the Copernicus Data Store of the ECMWF with access to a subset of its climate model replicas. This includes a risk management table that accounts for the replication strategy and has led to adaptations of the ESPRI data curation policy and versioning mechanisms.
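As an illustration of the daily rsync-based mirroring described above, the following minimal sketch assembles an rsync command line in Python. It is not the in-house ESPRI process: the function name, the paths, and the host `mirror.example.org` are hypothetical, and the choice of options (`--archive`, `--delete`, `--checksum`) is an assumption about what a site-to-site mirror might use.

```python
import shlex


def build_mirror_command(source, destination, dry_run=True):
    """Build an rsync command that mirrors `source` into `destination`.

    Hypothetical helper: the option set below is an assumption, not the
    documented ESPRI configuration.
    """
    command = [
        "rsync",
        "--archive",   # preserve permissions, timestamps, symlinks; recurse
        "--delete",    # remove files on the mirror that no longer exist at the source
        "--checksum",  # compare file contents by checksum, not size and mtime
    ]
    if dry_run:
        # Report what would be transferred without changing anything.
        command.append("--dry-run")
    # A trailing slash on the source copies the directory contents
    # rather than the directory itself.
    command.append(source.rstrip("/") + "/")
    command.append(destination.rstrip("/") + "/")
    return command


# Example with hypothetical paths and host name.
example = build_mirror_command("/data/obs", "mirror.example.org:/data/obs")
print(shlex.join(example))
```

Defaulting to a dry run keeps the sketch safe to try; a scheduled job would pass `dry_run=False` and hand the list to `subprocess.run`.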

Risk management is addressed by ESPRI engineers through continuous on-site monitoring of the storage resources: Nagios probes provide low-level supervision of the services (state of controllers, supplies, logical, physical, and virtual disks, fans, temperature, etc.) as well as supervision of the high-level services, and issue real-time warnings when a system failure raises a critical alert.
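To make the idea of a monitoring probe concrete, here is a minimal sketch of a disk-usage check that follows the standard Nagios plugin convention of exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). It is not one of the ESPRI probes: the function names and the 80% and 90% thresholds are hypothetical.

```python
import shutil

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def classify_usage(used_fraction, warn=0.80, crit=0.90):
    """Map a disk usage fraction (0.0 to 1.0) to a Nagios status code.

    The default thresholds are illustrative assumptions.
    """
    if not 0.0 <= used_fraction <= 1.0:
        return UNKNOWN
    if used_fraction >= crit:
        return CRITICAL
    if used_fraction >= warn:
        return WARNING
    return OK


def check_disk(path, warn=0.80, crit=0.90):
    """Return (status_code, message) for the file system containing `path`."""
    usage = shutil.disk_usage(path)
    used_fraction = usage.used / usage.total
    status = classify_usage(used_fraction, warn, crit)
    message = f"DISK {LABELS[status]} - {path} is {used_fraction:.0%} full"
    return status, message


# Example: check the file system holding the current directory.
status, message = check_disk(".")
print(message)
```

A real plugin would print the message and terminate with `sys.exit(status)` so that the monitoring server can read the exit code.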

Regardless of which of the backup processes described above is used, all replication procedures include a systematic check of file checksums to ensure consistency across archival copies, whether on-site or in our partners' file systems.
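The checksum control described above can be sketched as follows. This is a minimal illustration rather than the ESPRI implementation: the function names are hypothetical, and SHA-256 is an assumed algorithm, since the text does not say which checksum is used.

```python
import hashlib
from pathlib import Path


def file_checksum(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Return the hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(source_dir, replica_dir):
    """List relative paths whose replica is missing or differs from the source."""
    source_dir, replica_dir = Path(source_dir), Path(replica_dir)
    mismatches = []
    for source_file in sorted(p for p in source_dir.rglob("*") if p.is_file()):
        relative = source_file.relative_to(source_dir)
        replica_file = replica_dir / relative
        if not replica_file.is_file():
            # The replica copy does not exist at all.
            mismatches.append(relative.as_posix())
        elif file_checksum(source_file) != file_checksum(replica_file):
            # The replica exists but its content differs.
            mismatches.append(relative.as_posix())
    return mismatches
```

An empty list from `find_mismatches` means every source file has a byte-identical replica; any listed path would need to be transferred again.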

All storage media run on hardware under warranty. Every storage disk is monitored and renewed before its warranty expires. In the worst-case scenario, most of our datasets can be retrieved from backup solutions or from our partners' replicas.